The University of Oregon’s HPC cluster, Talapas, comprises approximately 9,500 cores, 90 TB of memory, 120 GPUs, and over 2 PB of storage. Talapas is a heterogeneous cluster that includes compute nodes with Intel and AMD processors, Nvidia GPUs, large-memory nodes, and nodes with large local scratch disks.

Nodes are interconnected via 100Gb/sec EDR InfiniBand, and the Spectrum Scale (GPFS) file system is mounted on all cluster nodes.

Numerous programming languages, compilers, and mathematical and scientific libraries are available cluster-wide. These include over 225 discipline-specific application packages and a large collection of Python packages, among them machine learning frameworks such as TensorFlow, Keras, and PyTorch.

Researchers can access Talapas through a command-line interface or an easy-to-use web-based interface.

The Slurm workload manager handles cluster resource management and job scheduling.
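Slurm jobs are usually submitted as batch scripts whose `#SBATCH` lines request resources. The following is a minimal sketch, in Python, that assembles such a script for a single-node job. The directives it emits (`--cpus-per-task`, `--mem`, `--time`, `--gres`) are standard `sbatch` options, but the partition (`compute`) and account (`mylab`) names are hypothetical placeholders, not Talapas's actual values; check the RACS documentation for the real ones.

```python
from dataclasses import dataclass


@dataclass
class JobRequest:
    """Resources for a single-node Slurm job (all values are illustrative)."""
    job_name: str
    partition: str  # hypothetical partition name, not a documented Talapas value
    account: str    # hypothetical account name, not a documented Talapas value
    cpus: int
    mem_gb: int
    hours: int
    gpus: int = 0


def render_sbatch(req: JobRequest, command: str) -> str:
    """Return the text of a batch script that could be passed to `sbatch`."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={req.job_name}",
        f"#SBATCH --partition={req.partition}",
        f"#SBATCH --account={req.account}",
        "#SBATCH --nodes=1",
        "#SBATCH --ntasks=1",
        f"#SBATCH --cpus-per-task={req.cpus}",
        f"#SBATCH --mem={req.mem_gb}G",
        f"#SBATCH --time={req.hours:02d}:00:00",  # HH:MM:SS wall-clock limit
    ]
    if req.gpus:
        # Generic GPU request; any site-specific GPU type string is omitted.
        lines.append(f"#SBATCH --gres=gpu:{req.gpus}")
    lines.append(command)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    job = JobRequest("demo", "compute", "mylab", cpus=28, mem_gb=100, hours=4)
    print(render_sbatch(job, "srun python analysis.py"))
```

Writing the returned text to a file such as `job.sh` and running `sbatch job.sh` on a login node would queue the job; Slurm then places it on a node that satisfies the requested cores, memory, and GPUs.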

Talapas is suitable for high-performance computing in a wide range of disciplines, including bioengineering, computer science, data science, economics, education, genomics, linguistics, neuro-engineering, physics and the other physical sciences, and psychology. It also supports teaching and student learning in degree programs across campus, including Bioinformatics, Biology, Business, Chemistry, Computer Science, Earth Science, Genomics, and Physics.

Since its inception in 2018, Talapas has supported over 1,400 researchers across 35 departments and labs and has run over 90 million hours of computation.

Talapas takes its name from the Chinook word for coyote, who was an educator and keeper of knowledge. The RACS team consulted with the Confederated Tribes of the Grand Ronde to choose this name, following a Northwest convention of using American Indian languages when naming supercomputers.

Specifications

The Talapas cluster continues to grow and evolve. Below is the latest snapshot of node specifications; all nodes run on Dell PowerEdge C-series and R-series servers.

Shared nodes

| Qty | Node Type | Processor (total cores) | Memory | Local disk | Accelerator |
|---|---|---|---|---|---|
| 98 | Compute | dual Intel E5-2690v4 (28 cores) | 128 GB | 200 GB SSD | n/a |
| 24 | GPU | dual Intel E5-2690v4 (28 cores) | 256 GB | 200 GB SSD | quad Nvidia Tesla K80 |
| 2 | Large memory | quad Intel E7-4830v4 (56 cores) | 1 TB | 860 GB SSD | n/a |
| 4 | Large memory | quad Intel E7-4830v4 (56 cores) | 2 TB | 860 GB SSD | n/a |
| 2 | Large memory | quad Intel E7-4830v4 (56 cores) | 4 TB | 860 GB SSD | n/a |
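The shared-node table can be tallied to see the aggregate capacity available on the shared nodes. This sketch transcribes each row as (quantity, cores, memory, accelerator cards) and sums quantity times the per-node value. It assumes 1 TB = 1024 GB and counts each quad-K80 node as four cards; note that each Tesla K80 card carries two GPU chips, so the number of devices visible to CUDA would be twice the card count.

```python
# Rows transcribed from the shared-node table:
# (quantity, cores per node, memory per node in GB, accelerator cards per node).
# TB values are converted at 1 TB = 1024 GB.
SHARED_NODES = [
    (98, 28, 128, 0),   # Compute
    (24, 28, 256, 4),   # GPU, quad Nvidia Tesla K80
    (2, 56, 1024, 0),   # Large memory, 1 TB
    (4, 56, 2048, 0),   # Large memory, 2 TB
    (2, 56, 4096, 0),   # Large memory, 4 TB
]


def totals(rows):
    """Sum nodes, cores, memory (GB), and accelerator cards over table rows."""
    nodes = sum(q for q, _, _, _ in rows)
    cores = sum(q * c for q, c, _, _ in rows)
    mem_gb = sum(q * m for q, _, m, _ in rows)
    cards = sum(q * a for q, _, _, a in rows)
    return nodes, cores, mem_gb, cards


if __name__ == "__main__":
    nodes, cores, mem_gb, cards = totals(SHARED_NODES)
    print(f"{nodes} nodes, {cores} cores, {mem_gb} GB memory, {cards} GPU cards")
```

Running it prints `130 nodes, 3864 cores, 37120 GB memory, 96 GPU cards`.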

Condo nodes

| Qty | Node Type | Processor (total cores) | Memory | Local disk | Accelerator |
|---|---|---|---|---|---|
| 1 | Compute | dual Intel E5-2690v4 (28 cores) | 128 GB | 200 GB SSD | n/a |
| 1 | Compute | dual Intel E5-2690v4 (28 cores) | 192 GB | 200 GB SSD | n/a |
| 8 | Compute | dual Intel E5-2690v4 (28 cores) | 256 GB | 200 GB SSD | n/a |
| 10 | Compute | dual Intel Gold 6230 (40 cores) | 192 GB | 200 GB SSD | n/a |
| 8 | Large local scratch | dual Intel Gold 6230 (40 cores) | 384 GB | 9 TB SSD | n/a |
| 7 | Compute | dual Intel Gold 6148 (40 cores) | 192 GB | 200 GB SSD | n/a |
| 60 | Compute | dual Intel Gold 6148 (40 cores) | 384 GB | 200 GB SSD | n/a |
| 1 | Compute | dual Intel Gold 6148 (40 cores) | 416 GB | 200 GB SSD | n/a |
| 2 | Large memory | dual Intel Gold 6148 (40 cores) | 768 GB | 200 GB SSD | n/a |
| 26 | Compute | dual Intel Gold 6248 (40 cores) | 192 GB | 200 or 420 GB SSD | n/a |
| 1 | Compute | dual Intel Gold 6248 (40 cores) | 384 GB | 200 GB SSD | n/a |
| 1 | Compute | dual Intel Gold 6248 (40 cores) | 384 GB | 420 GB SSD | n/a |
| 4 | GPU | dual Intel Gold 6248 (40 cores) | 384 GB | 420 GB SSD | quad Nvidia V100 |
| 1 | Large memory | dual Intel Platinum 8280L (56 cores) | 6 TB | 420 GB SSD | n/a |
| 3 | Compute | dual AMD EPYC 7413 (48 cores) | 256 GB | 420 GB SSD | n/a |
| 1 | Large memory | dual AMD EPYC 7413 (48 cores) | 1 TB | 420 GB SSD | n/a |
| 1 | GPU | dual AMD EPYC 7542 (64 cores) | 512 GB | 860 GB SSD | dual Nvidia A100 |
| 3 | GPU | dual AMD EPYC 7543 (64 cores) | 1 TB | 860 GB SSD | triple Nvidia A100 |
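Applying the same arithmetic to the condo table and adding the shared-node totals gives a quick consistency check against the cluster summary at the top of this page. This sketch assumes 1 TB = 1024 GB and counts GPUs per card (so each K80 card counts once); the shared-node totals are written as the products worked out from that table.

```python
# Rows transcribed from the condo-node table:
# (quantity, cores per node, memory per node in GB, GPUs per node).
# TB values are converted at 1 TB = 1024 GB.
CONDO_NODES = [
    (1, 28, 128, 0), (1, 28, 192, 0), (8, 28, 256, 0),      # E5-2690v4
    (10, 40, 192, 0), (8, 40, 384, 0),                      # Gold 6230
    (7, 40, 192, 0), (60, 40, 384, 0), (1, 40, 416, 0),
    (2, 40, 768, 0),                                        # Gold 6148
    (26, 40, 192, 0), (1, 40, 384, 0), (1, 40, 384, 0),
    (4, 40, 384, 4),                                        # Gold 6248 (GPU row: quad V100)
    (1, 56, 6144, 0),                                       # Platinum 8280L, 6 TB
    (3, 48, 256, 0), (1, 48, 1024, 0),                      # EPYC 7413
    (1, 64, 512, 2), (3, 64, 1024, 3),                      # EPYC 7542 / 7543 with A100s
]

# Shared-node totals, computed from the shared-node table.
SHARED_CORES = 98 * 28 + 24 * 28 + 8 * 56                               # 3864
SHARED_MEM_GB = 98 * 128 + 24 * 256 + 2 * 1024 + 4 * 2048 + 2 * 4096    # 37120
SHARED_GPUS = 24 * 4                                                    # quad K80 per GPU node


def column_total(rows, col):
    """Sum quantity * per-node value for one column (1=cores, 2=memory GB, 3=GPUs)."""
    return sum(row[0] * row[col] for row in rows)


if __name__ == "__main__":
    cores = column_total(CONDO_NODES, 1) + SHARED_CORES
    mem_gb = column_total(CONDO_NODES, 2) + SHARED_MEM_GB
    gpus = column_total(CONDO_NODES, 3) + SHARED_GPUS
    print(f"{cores} cores, {mem_gb} GB memory, {gpus} GPUs")
```

Running it prints `9448 cores, 89632 GB memory, 123 GPUs`, in line with the approximately 9,500 cores, 90 TB of memory, and 120 GPUs quoted in the overview.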

Storage

Talapas provides over 2 petabytes (PB) of capacity on its Spectrum Scale (GPFS) parallel file system, served from an IBM Elastic Storage System (ESS), as well as local SSD scratch space on compute nodes for I/O-intensive workloads. By default, each account on Talapas is configured with an individual home directory and project space. Snapshots of home and project spaces let researchers conveniently retrieve accidentally deleted files. Access to external storage via UO Cloud, Google Drive, and Dropbox is also available for backup and archiving.

| Appliance | Enclosures | Drives | Filesystem | Usable Space |
|---|---|---|---|---|
| IBM Elastic Storage System | ESS 3200 and ESS 5000 SL2 | 15 TB NVMe SSD and 16 TB SAS | GPFS | 2.1 PB |

Large Data Transfer

Transferring data to and from Talapas is a common requirement in research computing and can be especially time-consuming for large datasets. The UO subscribes to Globus, which provides fast, secure, and reliable data transfers between researchers' desktops or laptops and Talapas, as well as access to data stored on a variety of national research clusters. Talapas provides a dedicated Globus data transfer node connected via 100Gb/sec Ethernet to facilitate large transfers.
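Because the Globus transfer node's link runs at 100Gb/sec, a back-of-the-envelope lower bound on transfer time is the dataset size in bits divided by the line rate. The sketch below computes that bound for sizes in decimal terabytes. The `efficiency` parameter is a hypothetical knob for the fraction of line rate actually achieved; real throughput also depends on the remote endpoint, storage speed, and network path, so actual transfers will generally take longer.

```python
def transfer_seconds(size_tb: float, link_gbps: float = 100.0, efficiency: float = 1.0) -> float:
    """Lower-bound time (seconds) to move `size_tb` decimal terabytes over a link.

    `efficiency` is an assumed fraction of line rate actually achieved, in (0, 1].
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = size_tb * 1e12 * 8                      # decimal TB -> bits
    return bits / (link_gbps * 1e9 * efficiency)   # Gb/s -> bits per second


if __name__ == "__main__":
    for eff in (1.0, 0.5):
        t = transfer_seconds(10, efficiency=eff)
        print(f"10 TB at {eff:.0%} of 100 Gb/s: {t / 60:.1f} min")
```

Running it prints `10 TB at 100% of 100 Gb/s: 13.3 min` and `10 TB at 50% of 100 Gb/s: 26.7 min`; a single terabyte needs at least 80 seconds even at full line rate.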

Network

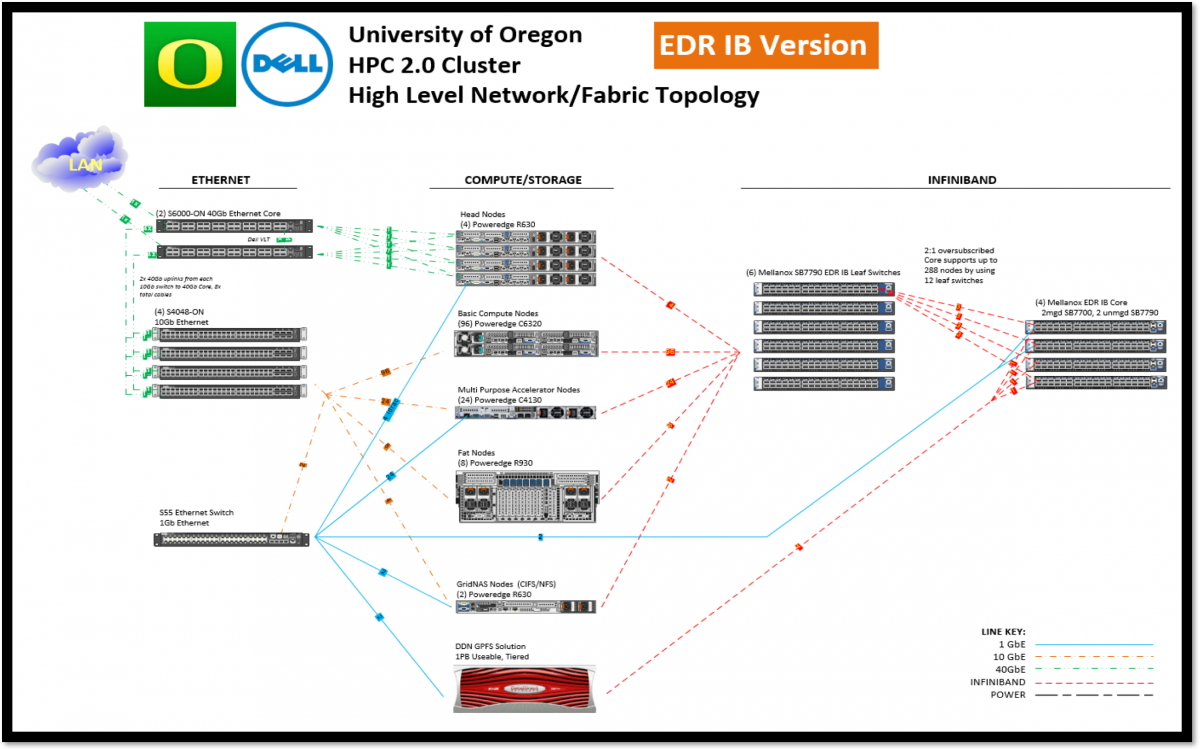

Talapas cluster nodes are connected to both a 10Gb/sec Ethernet management network and a 100Gb/sec EDR InfiniBand (IB) high-speed, low-latency network. The IB network uses a fat-tree topology with a 2:1 blocking ratio between nodes and the core, and it is non-blocking between nodes on the same leaf switch. The GS14K and ESS storage cluster nodes are connected directly to the IB core.
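A 2:1 blocking ratio means a leaf switch has half as much uplink bandwidth toward the core as it has toward its nodes. The sketch below works through what that implies for per-node bandwidth, assuming equal-speed ports, traffic spread evenly over the uplinks, and no protocol overhead. The 24-down/12-up split of a 36-port EDR leaf switch is a hypothetical configuration chosen to illustrate 2:1, not Talapas's documented port layout.

```python
def blocking_ratio(down_ports: int, up_ports: int) -> float:
    """Node-facing to core-facing bandwidth on a leaf switch whose ports all run at one speed."""
    return down_ports / up_ports


def worst_case_cross_leaf_gbps(down_ports: int, up_ports: int, link_gbps: float = 100.0) -> float:
    """Per-node share of uplink bandwidth when every node on the leaf sends off-leaf at once.

    Assumes traffic is balanced evenly across uplinks; a node never exceeds its own link rate.
    """
    return link_gbps * min(1.0, up_ports / down_ports)


if __name__ == "__main__":
    down, up = 24, 12  # hypothetical 36-port EDR leaf: 24 ports to nodes, 12 to the core
    print(f"blocking ratio: {blocking_ratio(down, up):g}:1")
    print("same-leaf traffic: up to 100 Gb/s per node (non-blocking)")
    print(f"all nodes crossing the core at once: {worst_case_cross_leaf_gbps(down, up):g} Gb/s per node")
```

Under these assumptions, traffic between nodes on the same leaf switch can run at the full 100Gb/sec, while a multi-node job spread across leaves may see roughly half that per node when every node on a leaf communicates across the core at the same time.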

[Figure: network diagram from the initial Talapas deployment]

Location

Talapas is located on-premises at the University of Oregon in the Allen Hall Data Center.

Citations and Acknowledgement

Please use the following language to acknowledge Talapas in any publications or presentations of work that made use of the cluster:

This work benefited from access to the University of Oregon high performance computing cluster, Talapas.